Corpus Statistics

Title: ByteEthics: Decoding AI's Ethical Fabric

Why I Chose to Create This TEI Corpus

I chose to build this curated TEI corpus, titled ByteEthics, because I wanted to understand, from a distant-reading perspective, how emerging technologies challenge our traditional ethical frameworks, a question I see as urgent.

Current AI technologies largely rely on non-conceptual methods, simulating logic through statistical patterns at a pace and efficiency that far exceed previous models. This acceleration and capability raise critical ethical dilemmas.

As AI systems become increasingly lifelike and capable of transcending human intellectual boundaries, we must consider the implications. The assertion that systems like GPT possess an IQ of 155, significantly higher than that of the average human, suggests an unprecedented potential for learning and evolution. At the same time, this intelligence blurs the lines between reality and representation, echoing dilemmas of the 19th century in a modern, digital context.

This blurring of boundaries raises profound questions not just for the elite but for society at large. The ethics of the virtual realm pose a unique challenge: Who defines these ethics? Are they an extension of human ethics, or do they adopt a machine-oriented ethics, perhaps with an anti-humanist stance? The prospect of machines making ethical decisions for and about themselves represents a fundamental shift in our ethical landscape.

This underscores the importance of scrutinizing the statistical patterns that govern AI behavior: patterns that, if misdirected, could lead to significant harm and ethical breaches in both innovation and societal norms, including a rise in online crime.

Steps and Challenges Faced During Corpus Curation:

- I selected only recent articles on digital ethics, from 2017 up to the most recent essays published in 2024. Because LLMs have emerged only recently, I did not include older texts unrelated to the current AI landscape.

- I wanted to include texts that would help me trace the evolution of digital ethics and AI, revealing shifts in vocabulary, emerging concerns, and historical influences on the discourse.

- Initially, I selected almost 55 books, but I later realized how much their formats differed. I did not want to complicate my work, because I wanted to start with a simpler corpus and get a better grip on the steps involved in corpus curation.

- I therefore simplified my approach and selected a diverse set of essential articles and readings from the syllabi of several AI Ethics courses.

- After many rounds of selecting closely related articles, my present corpus consists of only 24 files: 23 articles and one book, the Oxford Handbook of Digital Ethics.

- The initial conversion from PDF to TXT was straightforward with a single Python script, and I was able to convert all my articles. The conversion kept failing, however, for the book I really wanted in my corpus: even after many attempts, the process was reported as "Killed" partway through, which also halted the conversion of the other files. I therefore removed that book from the batch so that all the other files could be processed first.

- Later, having found no other solution for the book, I used the PyMuPDF library to convert it to TXT. The structure of the output did not look as good as that of my other files, but I needed the book in my corpus, so I accepted it as it was.

- For the TEI conversion, I struggled with a few files for which it was difficult to write code that tagged the entire structure correctly. I kept my approach simple for most files, adapting code from the class slides. Getting the TEI header at the top with the extracted metadata was somewhat difficult. For the Oxford Handbook, for instance, I could not capture proper paragraph tags; all I could manage was a basic TEI header and basic chapter headings.

- Sometimes the TEI output files contained only the TEI header, with no text body tagged. I had to check the code manually to find and fix the issue, and I finally obtained a corpus in which each file has a TEI header with author, title, and year metadata.

- I wrote 24 Python scripts. Although some reuse code from one another, I kept them separate so that each script caters to the unique structural elements of its file.

- To keep track of my conversions and know where each file was, I gave every file the same base name at every stage: the PDF, the TXT, the TEI Python script, and the output XML file.
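On Linux, a process reported as "Killed" has usually received SIGKILL, often from the kernel's out-of-memory killer, and when every file is converted inside one process, a single failure stops the whole batch. The following is a minimal sketch of one way to isolate failures by running each conversion in its own child process and keeping the same base name for the output. It assumes a hypothetical converter script (for example, one that loops over PyMuPDF's `page.get_text()`) that accepts an input PDF path and an output TXT path as arguments. The function name is also hypothetical.

```python
import subprocess
import sys
from pathlib import Path


def convert_each_in_own_process(pdf_dir, txt_dir, converter_script, timeout=900):
    """Convert every PDF in pdf_dir in a separate child process.

    converter_script is a hypothetical script invoked as
    `python converter_script <input.pdf> <output.txt>`.
    Returns a dict mapping each failed PDF name to a short reason.
    """
    txt_dir = Path(txt_dir)
    txt_dir.mkdir(parents=True, exist_ok=True)
    failures = {}
    for pdf in sorted(Path(pdf_dir).glob("*.pdf")):
        # Same base name at every stage: report.pdf -> report.txt
        out_path = txt_dir / f"{pdf.stem}.txt"
        try:
            result = subprocess.run(
                [sys.executable, str(converter_script), str(pdf), str(out_path)],
                capture_output=True,
                text=True,
                timeout=timeout,
            )
        except subprocess.TimeoutExpired:
            failures[pdf.name] = "timed out"
            continue
        if result.returncode < 0:
            # On POSIX, -N means the child was killed by signal N (e.g. -9 for SIGKILL)
            failures[pdf.name] = f"killed by signal {-result.returncode}"
        elif result.returncode != 0:
            failures[pdf.name] = f"exited with code {result.returncode}"
    return failures
```

A killed or crashing file then appears in the returned dict while the remaining files are still converted, and it can be retried separately, for example with more memory or a different extraction library.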
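The TEI step can be sketched with Python's standard `xml.etree.ElementTree` instead of the class code. This sketch assumes that paragraphs in the TXT files are separated by blank lines and that author, title, and year have already been extracted. It builds a minimal header, with title and author in `titleStmt` and a `bibl` carrying the date in `sourceDesc`, and wraps each paragraph in `<p>`. If no paragraphs are found, it raises an error rather than silently producing a header-only file, which addresses the failure described above. The `publicationStmt` text and the helper names are placeholders.

```python
import xml.etree.ElementTree as ET

TEI_NS = "http://www.tei-c.org/ns/1.0"
ET.register_namespace("", TEI_NS)  # serialize TEI as the default namespace


def tei(tag):
    """Qualify a tag name with the TEI namespace."""
    return f"{{{TEI_NS}}}{tag}"


def txt_to_tei(text, title, author, year):
    """Wrap plain text in a minimal TEI document; paragraphs are blank-line blocks."""
    root = ET.Element(tei("TEI"))
    file_desc = ET.SubElement(ET.SubElement(root, tei("teiHeader")), tei("fileDesc"))

    title_stmt = ET.SubElement(file_desc, tei("titleStmt"))
    ET.SubElement(title_stmt, tei("title")).text = title
    ET.SubElement(title_stmt, tei("author")).text = author

    # Placeholder publication statement (TEI requires one)
    pub_stmt = ET.SubElement(file_desc, tei("publicationStmt"))
    ET.SubElement(pub_stmt, tei("p")).text = "ByteEthics corpus file."

    # Describe the original source, including its year
    bibl = ET.SubElement(ET.SubElement(file_desc, tei("sourceDesc")), tei("bibl"))
    ET.SubElement(bibl, tei("title")).text = title
    ET.SubElement(bibl, tei("author")).text = author
    ET.SubElement(bibl, tei("date")).text = str(year)

    body = ET.SubElement(ET.SubElement(root, tei("text")), tei("body"))
    for block in text.split("\n\n"):
        paragraph = " ".join(block.split())  # collapse line breaks left by PDF extraction
        if paragraph:
            ET.SubElement(body, tei("p")).text = paragraph

    # Fail loudly instead of producing a header-only file
    if len(body) == 0:
        raise ValueError(f"no paragraphs found for {title!r}")
    return ET.tostring(root, encoding="unicode")
```

Files with Windows line endings (`\r\n\r\n`) would need normalizing before the split. Chapter headings, as in the Oxford Handbook, would need extra rules on top of this.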

Report: ByteEthics: Decoding AI's Ethical Fabric

Findings from the analysis with PhiloLogic:

I used PhiloLogic to search for specific words and phrases in order to grasp the central issues driving scholarly debates about AI ethics. In my quest for deeper ethical insights into artificial intelligence, distant reading gave me a summary of key points that helped me answer my research question:

- Searching for the term "artificial intelligence", I found that the key idea underlying the debate is the trust people place in AI without understanding how it is built:

➢ There is a huge potential for artificial intelligence to become a tyrannical ruler of us all, not because it is actually malicious, but because it will become increasingly easier for humans to trust AIs to make decisions and increasingly more difficult to detect and remove biases from AIs.

- Next, I searched for the term “data”, on which AI is trained and which leads to further ethical dilemmas:

➢ However, even though AI (artificial intelligence) is indisputably one of the main characters of the digital age, the term is slightly misleading. Much of the ethical problems arise from technologies that are not AI. Sometimes it’s a badly designed spreadsheet that causes the problem, or intrusive data collection.

- The collocation results showed that “data” was mostly associated with “big data”, hinting at the influence of big-data companies that control vast amounts of data, which grants them the power to shape how data is analyzed, understood, and used in various contexts.

- While reading about data, I noticed the emphasis on the word “transparency”, so I searched for it:

➢ Trust in the technology should be complemented by trust in those producing the technology. Yet such trust can only be gained if companies are transparent about their data usage policies and the design choices made while designing and developing new products. If data are needed to help AI make better decisions, it is important that the human providing the data is aware of how his/her data are handled, where they are stored, and how they are used.

- I then searched for “algorithms”, which operate on these data. Here are some interesting insights:

- Much like a poem, algorithms are tricky objects to know and often cannot even reveal their own workings. Critical research thus attends less to what an algorithm is and more to what it does.

- Yet, algorithms can inherit questionable values from the datasets and acquire biases in the course of (machine) learning, and automated algorithmic decision-making makes it more difficult for people to see algorithms as biased.

- Next, I searched for the word “bias” in relation to algorithmic bias:

➢ Bias Awareness and Mitigation: Bias detection and mitigation are also fundamental in achieving trust in AI. Bias can be introduced through training data, when it is not balanced and inclusive.

- Then I looked for “harm” to understand the consequences of different biases in technological systems:

- While the objects may be virtual, the harm suffered is real.

- The possible harm from algorithmic bias can be enormous as algorithmic decision-making becomes increasingly common in everyday life for high-stake decisions, e.g. parole decisions, policing, university admission, hiring, insurance and credit rating, etc.

- Next, I searched for further ethical insights regarding AI:

- Virtue ethics emphasizes the importance of developing moral character.

- Thus, virtue ethics primary action guidance—what it tells us to do—is largely preparatory: to practice building specific traits of moral excellence into one’s habits of digital behavior and to cultivate practical judgment in reading the shifting moral dynamics of digital environments.

- Virtue ethics does take action outcomes as relevant to particular moral choices.

- Next, I wanted to understand the consequences of these choices for society and for individuals:

- While we continue to work on gender, racial, disability and other inclusive lenses in tech development, the continued lack of equity and representation in the tech community and thus tech design (especially when empowered by lots of rich, able-bodied white men) will continue to create harm for people living on the margins.

- The advocates of technologically-driven social isolation and trivialization will have to explain why — in the age of greatest access to information in history — we see a constant decline in knowledge and in the capacity to analyze information

- Finally, I wanted to understand the effect of AI on future generations:

➢ Most of the experts who are concerned about the future of health and well-being focused on the possibility that the current challenges posed by AI-facilitated social media feeds to mental and physical health will worsen. They also highlighted fears that these trends would take their toll: more surveillance capitalism; group conflict and political polarization; information overload; social isolation and diminishment of communication competence; social pressure; screen-time addictions; rising economic inequality; and the likelihood of mass unemployment due to automation

- The final step was to understand the key concerns and major stakeholders behind AI, and where AI could lead humanity:

- The corporate takeover of the country’s soul — profit over people — will continue to shape product design, regulatory loopholes and the systemic extraction of time, attention and money from the population.

- They are concerned that the world will be divided into warring cyber-blocks.

- It’s already clear that immense human data production coupled with biometrics and video surveillance can create environments that severely hobble basic human freedoms. Even more worrisome is that the sophistication of digital technologies could lead techno-authoritarian regimes to be so effective that they even cripple prospects for public feedback, resistance and protest, and change altogether.

- There are other reasons to expect digital technology to become more individualized and vivid. Algorithmic recommendations are likely to become more accurate (however accuracy is defined), and increased data, including potentially biometric, physiological, synthetic and even genomic data may feature into these systems. Meanwhile, bigger screens, clever user experience design, and VR and AR technologies could make these informational inputs feel all the more real and pressing.

- Pessimistically speaking, this means that communities that amplify our worst impulses and prey upon our weaknesses, and individuals that preach misinformation and hate are likely to be more effective than ever in finding and persuading their audiences. Fortunately, there are efforts to dissipate and combat these trends in current and emerging areas of digital life, but [...] several decades.

- Dis- and misinformation will be on the rise by 2035, but they have been around ever since humans first emerged on Earth. One of the biggest challenges now is that people do not follow complicated narratives — they don’t go viral, and science is often complicated.

- We will need to find ways to win people over, despite the preference of algorithms and people for simple, one-sided narratives. We need more people-centered approaches to remedy the challenges of our day. Across communities and nations, we need to internally acknowledge the troubling events of history and of human nature, and then strive externally to be benevolent, bold and brave in finding ways wherever we can at the local level across organizations or sectors or communities to build bridges. The reason why is simple: We and future generations deserve such a world.

- I do think there will be a cultural counterbalance that emerges, at what point I can’t guess, toward less digital reliance overall, but this will be left to the individual or family unit to foment, rather than policymakers, educators, civic leaders or other institutions.
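All of the searches above were run in PhiloLogic. The sketch below is a rough, hypothetical illustration of what a keyword-in-context (KWIC) search and a collocation count do. It uses a simple lowercase regex tokenizer and a fixed window of neighboring words. PhiloLogic's own tokenization, window sizes, and filtering differ, so counts from this sketch would not match its reports. The function names are illustrative only.

```python
import re
from collections import Counter

# Assumed tokenizer: lowercase words, allowing inner hyphens/apostrophes
TOKEN = re.compile(r"[a-z]+(?:[-'][a-z]+)*")


def tokenize(text):
    return TOKEN.findall(text.lower())


def kwic(text, phrase, width=5):
    """Return one line per hit: `width` words of left context, [hit], right context."""
    tokens = tokenize(text)
    target = tokenize(phrase)
    if not target:
        return []
    n = len(target)
    lines = []
    for i in range(len(tokens) - n + 1):
        if tokens[i:i + n] == target:
            left = " ".join(tokens[max(0, i - width):i])
            right = " ".join(tokens[i + n:i + n + width])
            lines.append(f"{left} [{' '.join(target)}] {right}")
    return lines


def collocates(text, keyword, window=3, stopwords=frozenset()):
    """Count words appearing within `window` tokens on either side of `keyword`."""
    tokens = tokenize(text)
    keyword = keyword.lower()
    counts = Counter()
    for i, tok in enumerate(tokens):
        if tok == keyword:
            neighbors = tokens[max(0, i - window):i] + tokens[i + 1:i + 1 + window]
            counts.update(w for w in neighbors if w != keyword and w not in stopwords)
    return counts
```

With this approach, a result like "data" co-occurring mostly with "big" would appear at the top of `collocates(text, "data").most_common()`. Passing a stop-word set keeps function words such as "the" from dominating the list.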

Conclusion:

- Searching for words related to my research topic helped me uncover the fundamentals of decoding the moral fabric of AI through distant reading. The approach let me survey the entire corpus and understand the significance of various ethical dilemmas, their roots, their consequences, and possible solutions to explore.

Use of Vector-Space Similarity Model:

I adapted the code from class for my use case. One issue I faced was exceeding the maximum number of characters that spaCy accepts per text, which is 1,000,000 by default. The solution I found was to raise this limit by adding the following line to my code:

➢ nlp.max_length = 2500000
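Raising `nlp.max_length` works. However, spaCy's error message for over-long texts warns that the parser and NER components need roughly 1 GB of temporary memory per 100,000 characters, so raising the limit is mainly safe when those components are not used. An alternative is to split each text into chunks below the default limit and process them one at a time. The sketch below assumes that paragraphs are separated by blank lines. `chunk_text` is a hypothetical helper and is not part of spaCy.

```python
def chunk_text(text, max_chars=1_000_000):
    """Split text into chunks of at most max_chars, breaking at blank lines where possible."""
    chunks, current = [], ""
    for para in text.split("\n\n"):
        # A single paragraph longer than the limit is cut into fixed-size pieces
        while len(para) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(para[:max_chars])
            para = para[max_chars:]
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = para
    if current:
        chunks.append(current)
    return chunks
```

With a loaded pipeline `nlp`, the chunks can be processed lazily with `nlp.pipe(chunk_text(text))`, and per-chunk results such as token counts can then be summed for the whole document.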

- I found TF-IDF (Term Frequency-Inverse Document Frequency) scores helpful for understanding how a numerical statistic can reflect how important a word is to a document within a collection or corpus.

- The scores of the top twenty words in each document helped me understand the ranked relevance of words in each file and, through them, the key ideas of each text.

- Beyond that, I experimented with the matrix to find the ten most repeated words across the corpus:

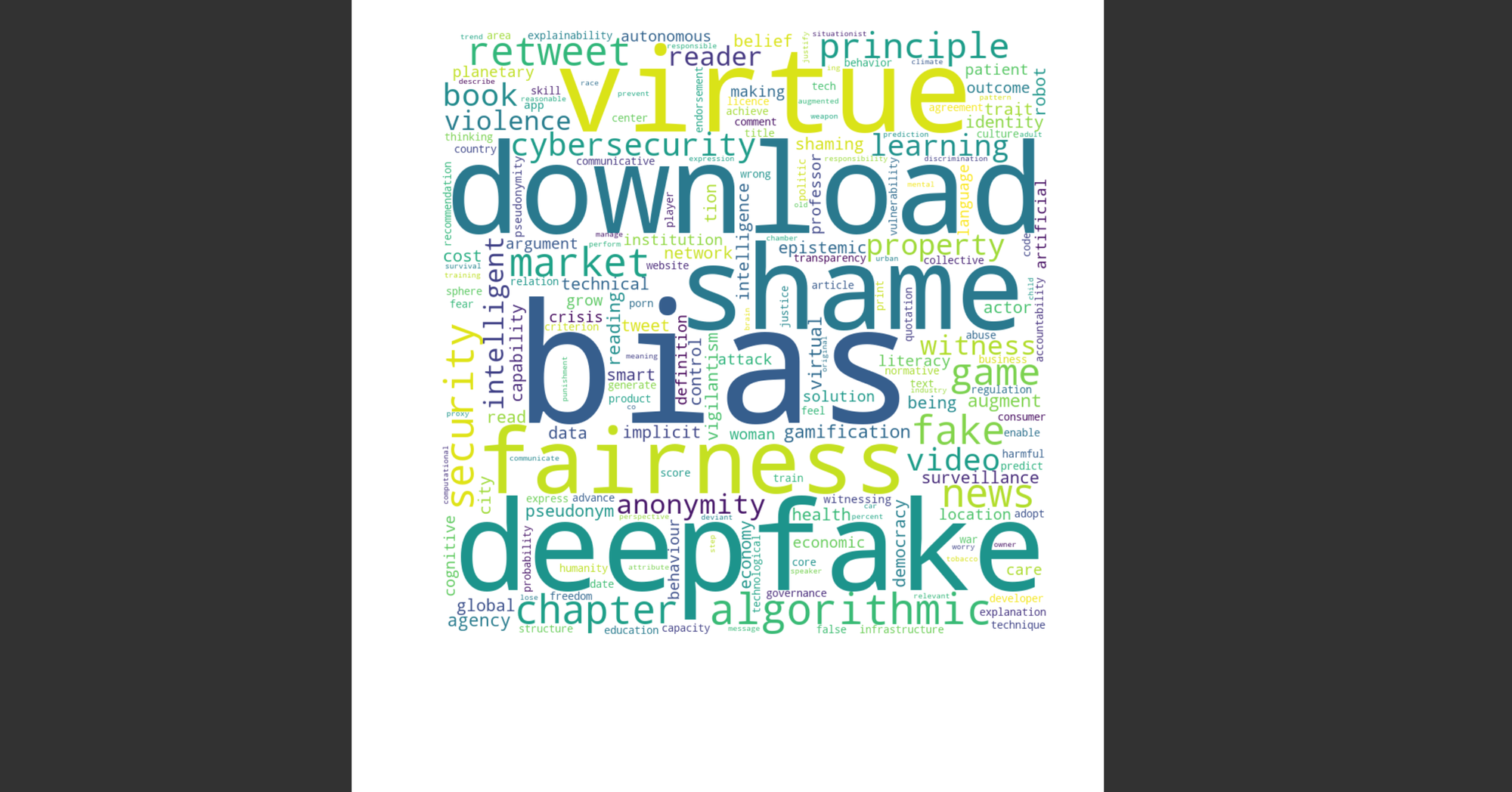

The most repeated word in the corpus was “bias”, which points to the importance of addressing bias and of improving the data-collection processes that in turn shape algorithmic outputs and AI responses.
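The TF-IDF step can be sketched in plain Python, assuming each document is already a list of lowercase tokens. Here a term's weight in a document is (its count in the document / document length) × log(N / number of documents containing the term), where N is the number of documents. Libraries such as scikit-learn's `TfidfVectorizer` use a smoothed idf and normalize each row by default, so their scores differ in scale. `corpus_top_terms` shows one possible way to rank words across the whole corpus by summing their weights over all documents. The class code may have done this differently, and all names here are hypothetical.

```python
import math
from collections import Counter


def tfidf(docs):
    """docs: dict name -> list of tokens. Returns dict name -> {term: weight}."""
    n_docs = len(docs)
    df = Counter()
    for tokens in docs.values():
        df.update(set(tokens))  # document frequency: count each term once per doc
    weights = {}
    for name, tokens in docs.items():
        tf = Counter(tokens)
        total = len(tokens) or 1  # avoid division by zero for empty documents
        weights[name] = {
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in tf.items()
        }
    return weights


def top_terms(scores, k=20):
    """Highest-weighted terms first; ties broken alphabetically."""
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:k]


def corpus_top_terms(weights, k=10):
    """Assumed corpus-wide ranking: sum each term's weights over all documents."""
    totals = Counter()
    for scores in weights.values():
        totals.update(scores)
    return top_terms(totals, k)
```

With this unsmoothed idf, a word that appears in every document gets weight 0. A raw-frequency count (summing `Counter(tokens)` over documents) would therefore rank very common words differently from a TF-IDF-based ranking.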

Exploring cosine similarity, which measures how similar two documents are regardless of their size by comparing the orientation rather than the magnitude of their vectors in a high-dimensional space, helped me understand how closely the documents in my corpus relate to each other.

Using cosine similarity, I computed scores for the ten most similar document pairs in the corpus. The top two results were:

- Anderson-ClosingthoughtsChatGPT-2023_images & Anderson-Themes-2023_images: 0.511186024897061

- Digital Ethics Oxford_images & Anderson-Themes-2023_images: 0.38069598348061595
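The pairwise ranking above can be sketched as follows. This assumes each document is represented as a sparse dict of term weights, such as TF-IDF weights. The cosine similarity of two vectors is their dot product divided by the product of their lengths. Dividing by the lengths is what makes the score independent of document size. The helper names are hypothetical and are not the class code.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity of two sparse vectors given as dicts term -> weight."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # an empty vector is treated as unrelated to everything
    return dot / (norm_u * norm_v)


def top_pairs(vectors, k=10):
    """Score every unordered pair of documents and return the k most similar."""
    scored = [
        ((a, b), cosine(vectors[a], vectors[b]))
        for a, b in combinations(sorted(vectors), 2)
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]
```

In the usage below, scaling every weight of a document (as with a longer document that has the same word proportions) leaves its similarity to others unchanged, which is the size-independence described above.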